Music Datasets for Machine Learning

Explore the World of Music in Your Next ML Project

The use of machine learning models to interpret and create music started as early as the 1960s. As computer hardware has improved, so has the complexity of machine learning applications for musicians and music listeners alike. But where does the data used to teach these models come from? In this article, we’ll look at a number of recent machine learning projects and the datasets used to train these innovative applications.

Background

Using a computer and a pattern-recognition program to create an original music composition was first demonstrated by Ray Kurzweil in 1965 on the television show “I’ve Got a Secret”. The source data, melodies from just one composer, was entered by hand into a program written in Fortran.

An automated music transcription application was created in 1977 at the University of Michigan. The dataset was a recording of a flute on reel-to-reel tape, which was converted into a data file using an analog-to-digital converter. Each file was only 60 seconds long because of computer memory limitations.

Modern projects in music have far more options for finding the datasets to train new models. Let’s look at a few recent projects in the following categories of machine learning music applications:

- Transcribing audio recordings into music notation

- Classifying music for listener recommendations

- Composing original music

- Following a live performance against a written score

Transcription

Original music is shared with an audience not only through recordings, but through music notation as well. Musicians can perform another composer’s song from sheet music without ever hearing the original piece. The “Non-Local Musical Statistics as Guides for Audio-to-Score Piano Transcription” project (Shibata et al., 2020) attempted to train a machine learning model to transcribe an audio recording of a song into music notation. To train the model, a dataset of classical music called the MIDI Aligned Piano Sounds (MAPS) database was used. MAPS has the audio recording (wav file) of each song and the corresponding MusicXML file representing the score.

MIDI: Musical Instrument Digital Interface. This is a standard protocol through which musical instruments can communicate with each other or with computers. A MIDI file contains multiple tracks that define the instrument patch, the notes, volume, and resonance.

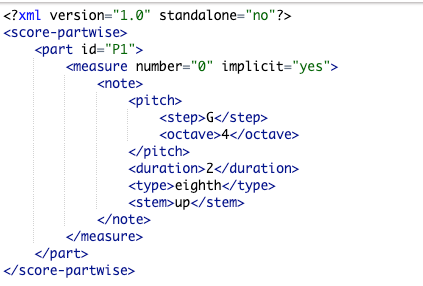
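To make the MIDI description above more concrete, here is a minimal sketch of the raw channel messages a track carries for the patch, volume, and notes. The function names are illustrative, not part of any library. The status bytes follow the MIDI 1.0 specification, and the note names assume the common convention in which note number 60 is C4 (middle C).

```python
# Sketch: building raw MIDI channel messages by hand (standard library only).
# Function names are hypothetical; the status bytes come from the MIDI 1.0 spec.

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def program_change(channel: int, program: int) -> bytes:
    """Select the instrument patch (0-127) on a channel (0-15)."""
    return bytes([0xC0 | channel, program])


def control_change(channel: int, controller: int, value: int) -> bytes:
    """Set a controller value; controller 7 is channel volume."""
    return bytes([0xB0 | channel, controller, value])


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Start a note (0-127) with the given velocity (0-127)."""
    return bytes([0x90 | channel, note, velocity])


def note_off(channel: int, note: int) -> bytes:
    """Stop a note that was started with note_on."""
    return bytes([0x80 | channel, note, 0])


def note_name(note: int) -> str:
    """Convert a MIDI note number to a name, assuming 60 is C4."""
    return f"{NOTE_NAMES[note % 12]}{note // 12 - 1}"


messages = [
    program_change(0, 0),       # patch 0 is Acoustic Grand Piano in General MIDI
    control_change(0, 7, 100),  # channel volume
    note_on(0, 60, 64),         # play middle C
    note_off(0, 60),            # release middle C
]

for message in messages:
    print(message.hex(" "))
```

A real MIDI file also stores timing information between these messages, which this sketch leaves out.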

MusicXML: An XML file format for representing Western music notation. This standard format is used by computer software to create and read musical scores.
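As a rough illustration of what a score looks like in this format, the sketch below reads the notes out of a tiny hand-written MusicXML fragment using Python's standard library. The fragment and the `read_notes` helper are made up for this example. Real scores contain many more elements (clefs, key signatures, multiple parts) that this sketch ignores.

```python
import xml.etree.ElementTree as ET

# A tiny hand-written MusicXML fragment: two quarter notes and a half rest.
SCORE = """
<score-partwise version="3.1">
  <part-list>
    <score-part id="P1"><part-name>Piano</part-name></score-part>
  </part-list>
  <part id="P1">
    <measure number="1">
      <attributes><divisions>1</divisions></attributes>
      <note>
        <pitch><step>C</step><octave>4</octave></pitch>
        <duration>1</duration><type>quarter</type>
      </note>
      <note>
        <pitch><step>E</step><alter>-1</alter><octave>4</octave></pitch>
        <duration>1</duration><type>quarter</type>
      </note>
      <note>
        <rest/>
        <duration>2</duration><type>half</type>
      </note>
    </measure>
  </part>
</score-partwise>
"""


def read_notes(xml_text):
    """Return (name, duration) pairs; duration is in MusicXML 'divisions' units."""
    root = ET.fromstring(xml_text)
    notes = []
    for note in root.iter("note"):
        duration = int(note.findtext("duration", default="0"))
        if note.find("rest") is not None:
            notes.append(("rest", duration))
            continue
        pitch = note.find("pitch")
        step = pitch.findtext("step")
        octave = pitch.findtext("octave")
        # <alter> is the chromatic alteration: -1 is a flat, 1 is a sharp.
        alter = int(pitch.findtext("alter", default="0"))
        accidental = {-1: "b", 0: "", 1: "#"}.get(alter, "")
        notes.append((f"{step}{accidental}{octave}", duration))
    return notes


print(read_notes(SCORE))
```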

Classical music recordings are readily available, as are their transcriptions. The use case for this application, however, was to have the model convert popular music into musical notation, something that isn’t often available to consumers. Training the model on classical music alone would have resulted in poor performance in the real world, so the researchers supplemented their dataset with two additional datasets:

- Print Gakufu by Yamaha Entertainment: this site has audio files of J-pop piano covers scraped from YouTube, and their corresponding transcriptions.

- MuseScore: this site has mp3 audio files of music covers uploaded by users and their corresponding transcriptions in MusicXML format. There are approximately 340,000 pop songs available.

Classification

Music streaming services are a great way to find new music, but finding that new favourite song can be daunting. Algorithms that recommend music based on a listener’s preferences can introduce listeners to new artists. Traditionally, music is recommended to a user based on other users who have a similar play history.

The “Leveraging the structure of musical preference in content-aware music recommendation” project (Magron & Févotte, 2020) involved recommending music based on how it made the listener feel. The Echo Nest Taste Profile dataset was used to find songs that had a large number of listen counts from listeners who used the service frequently. The Taste Profile dataset has a reference key to the Million Song Dataset, which provides the actual audio file (mp3) of each song.
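The filtering and joining step described above can be sketched with plain Python data structures. This assumes the play data arrives as (user, song, play count) triplets and that song metadata can be looked up by the shared song key. The IDs, titles, thresholds, and the `select_songs` helper are all made up for illustration and are not taken from the paper.

```python
from collections import defaultdict

# Hypothetical play-count triplets: (user_id, song_id, play_count).
plays = [
    ("u1", "s1", 12), ("u1", "s2", 3),
    ("u2", "s1", 7), ("u2", "s3", 1),
    ("u3", "s1", 2), ("u3", "s2", 5),
]

# Hypothetical metadata keyed by song_id, standing in for the lookup
# into the second dataset through the shared reference key.
tracks = {
    "s1": {"title": "Song A"},
    "s2": {"title": "Song B"},
    "s3": {"title": "Song C"},
}


def select_songs(plays, tracks, min_user_plays, min_listeners):
    """Keep songs heard by enough frequent listeners, joined to their metadata."""
    # 1. Total plays per user, to find the frequent listeners.
    user_totals = defaultdict(int)
    for user, _, count in plays:
        user_totals[user] += count
    frequent = {u for u, total in user_totals.items() if total >= min_user_plays}

    # 2. Distinct frequent listeners per song.
    listeners = defaultdict(set)
    for user, song, _ in plays:
        if user in frequent:
            listeners[song].add(user)

    # 3. Join the surviving songs to the metadata table on song_id.
    selected = []
    for song in sorted(listeners):
        if len(listeners[song]) >= min_listeners and song in tracks:
            selected.append(
                {"song_id": song, "listeners": len(listeners[song]), **tracks[song]}
            )
    return selected


print(select_songs(plays, tracks, min_user_plays=8, min_listeners=2))
```

With these made-up thresholds, only users `u1` and `u2` count as frequent listeners, and only `s1` is heard by both of them.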

The research team then added features to this combined dataset so that a song could be categorized by how it made a listener feel. The Essentia toolbox was used to extract descriptors such as happy, sad, or danceable. The model was then trained on which song descriptors would predict whether a user would listen to a song multiple times. Now, for example, a user would be recommended a new song that is described as ‘happy’ if they were currently listening to a lot of ‘happy’ songs.
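The ‘happy’ example can be illustrated with a deliberately simple content-based heuristic. This is not the model from the paper: it only weights each descriptor by how often the user played songs carrying it, then ranks unheard songs by that weight. The song IDs, labels, and function names are hypothetical.

```python
from collections import Counter

# Hypothetical descriptor labels per song, of the kind a tool like Essentia
# could produce; the values here are written by hand.
descriptors = {
    "a": {"happy", "danceable"},
    "b": {"happy"},
    "c": {"sad"},
    "d": {"happy", "danceable"},
    "e": {"sad"},
}

# Hypothetical listening history for one user: song_id -> play count.
history = {"a": 10, "b": 5, "c": 1}


def taste_profile(history, descriptors):
    """Weight each descriptor by the user's play counts, normalised to sum to 1."""
    profile = Counter()
    for song, count in history.items():
        for label in descriptors.get(song, ()):
            profile[label] += count
    total = sum(profile.values())
    return {label: weight / total for label, weight in profile.items()} if total else {}


def recommend(history, descriptors, k=1):
    """Rank songs the user has not played by how well they match the profile."""
    profile = taste_profile(history, descriptors)
    scores = {
        song: sum(profile.get(label, 0.0) for label in labels)
        for song, labels in descriptors.items()
        if song not in history
    }
    # Highest score first; ties are broken by song_id for a stable order.
    return sorted(scores, key=lambda song: (-scores[song], song))[:k]


print(recommend(history, descriptors, k=2))
```

Because this user mostly plays ‘happy’ and ‘danceable’ songs, song `d` ranks above the ‘sad’ song `e`.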

Composition

While original compositions by computers have been successfully created through projects like Brain.fm, the “Medley2K: A Dataset of Medley Transitions” project (Faber et al., 2020) used machine learning to create the bridges of music used in song medleys. When a musician performs a group of songs as a medley, the bridge is the few bars of music added to transition from one song to the next. To train a model to automatically compose these small transition pieces between songs for a user, a medley dataset was needed.

As in the Transcription project, the music data was pulled from the MuseScore website. Songs were filtered by type to only include medleys that had more than a ‘one-note’ bridge or silent bridge between songs. Since the medleys were stored in MusicXML files, the data scientists could add new features based on where the transition of each medley started and ended.

In this case, these transition annotations were not done manually, but by a machine learning model. Once the annotations were complete and added as a new feature to the MuseScore dataset, this became the training data for a new model that would compose original transitions for a given medley of songs.

Score Following

Score following is when a computer can identify where in the musical score a live performance currently is, just by listening to the piece. Applications for this type of model include:

- automatic page turning for pianists

- producing contextual information like translation of opera lyrics

- controlling cameras during a live performance

This can’t be solved through time stamps alone, since live performances involve unexpected pauses, applause breaks, or even performance errors like playing incorrect notes.
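To see why alignment has to tolerate pauses and wrong notes, here is a minimal sketch using dynamic time warping (DTW), a classic sequence-alignment technique. It is swapped in purely for illustration and is not the method used in the project discussed in this section. It works offline on symbolic MIDI pitch numbers rather than audio, whereas a real score follower has to process audio incrementally as it arrives.

```python
def align(score, performance):
    """Align performed pitches to score pitches with dynamic time warping.

    Returns a list of (score_index, performance_index) pairs.
    """
    n, m = len(score), len(performance)
    inf = float("inf")
    # cost[i][j] is the cheapest alignment of the first i score notes
    # with the first j performed notes.
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            mismatch = 0 if score[i - 1] == performance[j - 1] else 1
            cost[i][j] = mismatch + min(
                cost[i - 1][j - 1],  # both move on to the next note
                cost[i - 1][j],      # a score note was skipped
                cost[i][j - 1],      # the performer lingered or repeated
            )

    # Walk back from the end to recover the cheapest path.
    path = []
    i, j = n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        steps = [
            (cost[i - 1][j - 1], i - 1, j - 1),
            (cost[i - 1][j], i - 1, j),
            (cost[i][j - 1], i, j - 1),
        ]
        _, i, j = min(steps)
    path.reverse()
    return path


score = [60, 62, 64, 65]            # C4, D4, E4, F4 as written
performance = [60, 62, 62, 64, 66]  # D4 repeated, then F#4 played instead of F4

print(align(score, performance))
```

The repeated D4 stays on the same score position and the wrong final note is still matched to the last score note, which a fixed time stamp mapping could not do.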

The “Real-Time Audio-to-Score Alignment of Music Performances” project (Nakamura et al., 2015) used the Bach10 dataset to train their model to follow an audio recording and determine where in the score the song was. The Bach10 dataset consists of audio recordings of ten pieces by J. S. Bach. The features included are the audio file (wav), a MIDI file of the score, and a text file that maps pitch and time at each point in the audio file to the MIDI file.

Because there are only 10 pieces included in the dataset, each piece was split up into 25-second intervals to create a larger training dataset. These intervals of each song were perfect performances, but the researchers needed to create examples of inconsistent recordings. To do this, they updated the audio files to have pauses that didn’t match the MIDI file, as well as incorrect notes. Incorrect notes were usually a semitone off or a perfect twelfth above a given note to replicate a typical performance error.
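The augmentation steps above can be sketched on symbolic note events. This is an assumption made for simplicity: the text describes changes made to the audio files themselves, while the sketch edits lists of (onset in seconds, duration in seconds, MIDI pitch) tuples. All function names are hypothetical. A semitone is a shift of 1 and a perfect twelfth (an octave plus a fifth) is a shift of 19 in MIDI note numbers.

```python
import random

# Pitch shifts used for simulated mistakes, in semitones:
# one semitone down, one semitone up, or a perfect twelfth (12 + 7) up.
ERROR_SHIFTS = [-1, 1, 19]


def split_into_windows(notes, window=25.0):
    """Group notes into fixed-length windows, with onsets relative to each window.

    Simplification: a note belongs to the window where it starts, even if it
    rings past the window boundary.
    """
    windows = {}
    for onset, duration, pitch in notes:
        index = int(onset // window)
        windows.setdefault(index, []).append((onset - index * window, duration, pitch))
    return [windows[index] for index in sorted(windows)]


def insert_pause(notes, at, length):
    """Delay every note starting at or after `at` by `length` seconds."""
    return [
        (onset + length if onset >= at else onset, duration, pitch)
        for onset, duration, pitch in notes
    ]


def add_wrong_notes(notes, rate, rng):
    """Replace roughly a `rate` fraction of pitches with a typical mistake."""
    result = []
    for onset, duration, pitch in notes:
        if rng.random() < rate:
            # Only keep shifts that stay inside the valid MIDI range 0-127.
            shifts = [s for s in ERROR_SHIFTS if 0 <= pitch + s <= 127]
            pitch += rng.choice(shifts)
        result.append((onset, duration, pitch))
    return result


notes = [(0.0, 1.0, 60), (10.0, 1.0, 62), (26.0, 1.0, 64), (51.0, 1.0, 65)]
rng = random.Random(0)  # fixed seed so the example is repeatable

for window in split_into_windows(notes):
    noisy = add_wrong_notes(insert_pause(window, at=1.0, length=2.0), rate=0.5, rng=rng)
    print(noisy)
```

Keeping the original, unmodified note list alongside each altered copy gives the ground-truth score position for every simulated mistake.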

Conclusion

There are many use cases for machine learning with music, and having access to these diverse datasets will continue to inspire new projects in this field. Learning from the projects reviewed above, it’s clear that combining existing datasets to create new insights, or even augmenting existing datasets with synthetic data, can add value when training a machine learning model.

More projects like this can be found by looking through past conferences organized by the International Society for Music Information Retrieval (ISMIR) on their website https://www.ismir.net/conferences/

References

Non-Local Musical Statistics as Guides for Audio-to-Score Piano Transcription

Shibata, K.; Nakamura, E.; Yoshii, K. August 2020. ArXiv e-Prints

Leveraging the structure of musical preference in content-aware music recommendation

Magron, Paul; Févotte, Cédric. October 2020. ArXiv e-Prints

Medley2K: A Dataset of Medley Transitions

Faber, Lukas; Luck, Sandro; Pascual, Damian; Roth, Andreas; Brunner, Gino; Wattenhofer, Roger. August 2020. ArXiv e-Prints

Real-Time Audio-to-Score Alignment of Music Performances Containing Errors and Arbitrary Repeats and Skips

Nakamura, Tomohiko; Nakamura, Eita; Sagayama, Shigeki. December 2015. ArXiv e-Prints